Natural Language Processing (NLP) is a field at the intersection of computer science, artificial intelligence, and linguistics. Its primary goal is to enable computers to understand, interpret, and respond to human language in a way that is both meaningful and useful.

What is NLP?

NLP involves the interaction between computers and humans using natural language. It encompasses a range of processes that allow machines to read, decipher, and understand the nuances of human languages.

NLP is a subfield of computer science and artificial intelligence (AI) that uses machine learning to enable computers to understand and communicate with human language.

NLP enables computers and digital devices to recognize, understand and generate text and speech by combining computational linguistics—the rule-based modeling of human language—together with statistical modeling, machine learning and deep learning.

NLP research has helped enable the era of generative AI, from the communication skills of large language models (LLMs) to the ability of image generation models to understand requests. NLP is already part of everyday life for many: it powers search engines, customer service chatbots that respond to spoken commands, voice-operated GPS systems, and question-answering digital assistants such as Amazon’s Alexa, Apple’s Siri and Microsoft’s Cortana.

NLP also plays a growing role in enterprise solutions that help streamline and automate business operations, increase employee productivity and simplify business processes.

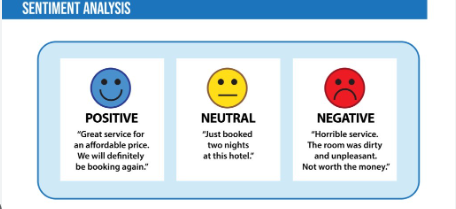

Sentiment Analysis

What is Sentiment Analysis?

Sentiment analysis is a subfield of NLP that focuses on identifying and extracting subjective information from text data. It is used to determine the emotional tone behind a series of words, helping to understand the attitudes, opinions, and emotions expressed within an online mention or piece of text.

Importance of Sentiment Analysis

Sentiment analysis is essential for businesses and organizations to monitor and assess public sentiment about products, services, or events. It enables them to:

- Gain customer insights: Understand how users feel about a product or service.

- Monitor brand reputation: Keep track of brand mentions and manage crises.

- Improve products: Analyze feedback for improvement.

- Automate feedback processing: Analyze large volumes of feedback automatically.

How Sentiment Analysis Works

Sentiment analysis typically involves several key steps:

- Data Collection:

- Text data is collected from various sources, such as social media, customer reviews, surveys, and news articles.

- Text Preprocessing:

- Tokenization: Splitting text into words or phrases.

- Lowercasing: Converting all text to lowercase for uniformity.

- Stop Words Removal: Removing common words (e.g., the, is, and) that do not contribute significantly to sentiment.

- Stemming and Lemmatization: Reducing words to their base or root form.

- Noise Removal: Eliminating characters like punctuation and numbers that do not add value.

- Feature Extraction:

- Bag of Words (BoW): Creating a representation of the text by counting the occurrence of each word.

- TF-IDF (Term Frequency-Inverse Document Frequency): Weighing words based on their importance in the document relative to the entire dataset.

- Word Embeddings: Using models like Word2Vec or GloVe to create vector representations that capture semantic meaning.

- Modeling:

- Machine Learning Algorithms:

- Naive Bayes Classifier: A probabilistic algorithm that works well for text classification.

- Support Vector Machines (SVM): Often used for binary classification tasks.

- Logistic Regression: A simple and effective algorithm for text classification.

- Deep Learning Techniques:

- Recurrent Neural Networks (RNNs): Useful for capturing sequential dependencies in text.

- LSTM (Long Short-Term Memory): A type of RNN that can learn long-term dependencies and handle context better.

- Transformers (e.g., BERT, GPT): Advanced models that excel in understanding context and handling complex language structures.

- Prediction and Output:

- The trained model classifies text as positive, negative, or neutral, or assigns a score to indicate the strength of the sentiment.
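
The steps above can be sketched end to end with only the Python standard library. This is a minimal illustration, not a production pipeline: the stop-word list, the four training sentences, and the function names (`preprocess`, `train_naive_bayes`, `predict`) are all assumptions made for the example, and a real system would use a much larger labeled dataset and a library implementation.

```python
import math
import re
from collections import Counter, defaultdict

# Tiny illustrative stop-word list (an assumption, not a standard list).
STOP_WORDS = {"the", "is", "and", "a", "this", "it", "was", "i"}


def preprocess(text):
    """Lowercase, replace non-letters with spaces, tokenize, drop stop words."""
    text = re.sub(r"[^a-z\s]", " ", text.lower())
    return [tok for tok in text.split() if tok not in STOP_WORDS]


def train_naive_bayes(labeled_texts):
    """Collect per-class document counts and bag-of-words counts."""
    class_docs = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in labeled_texts:
        bow = Counter(preprocess(text))  # bag of words for one document
        class_docs[label] += 1
        word_counts[label].update(bow)
        vocab.update(bow)
    return class_docs, word_counts, vocab


def predict(model, text):
    """Return the class with the highest log-probability (Laplace smoothing)."""
    class_docs, word_counts, vocab = model
    total_docs = sum(class_docs.values())
    scores = {}
    for label in class_docs:
        log_prob = math.log(class_docs[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for tok in preprocess(text):
            if tok in vocab:  # words never seen in training are ignored
                smoothed = (word_counts[label][tok] + 1) / (total_words + len(vocab))
                log_prob += math.log(smoothed)
        scores[label] = log_prob
    return max(scores, key=scores.get)


# Hypothetical training data, far too small for real use.
TRAIN = [
    ("I love this phone, the battery is great", "positive"),
    ("Great service and friendly staff", "positive"),
    ("Terrible battery and awful screen", "negative"),
    ("I hate the slow, awful service", "negative"),
]

model = train_naive_bayes(TRAIN)
print(predict(model, "The staff was great"))  # positive
print(predict(model, "awful phone"))          # negative
```

With so little data the classifier only reflects word counts in the toy examples; the point is the flow from raw text to tokens, to counts, to a predicted label.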

Types of Sentiment Analysis

- Fine-Grained Sentiment Analysis:

- Assigns a specific sentiment score or category (e.g., very positive, positive, neutral, negative, very negative).

- Emotion Detection:

- Identifies specific emotions such as happiness, anger, sadness, and surprise.

- Aspect-Based Sentiment Analysis:

- Determines the sentiment towards specific aspects of an entity (e.g., product features).

- Multilingual Sentiment Analysis:

- Analyzes text in different languages, which may involve specific preprocessing steps and models.
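
Fine-grained analysis is often implemented by bucketing a continuous score into categories. The sketch below assumes a score in the range -1.0 to 1.0 and uses cut-off values chosen purely for illustration; the function name `fine_grained_label` and the thresholds are hypothetical and would normally be tuned on labeled data.

```python
def fine_grained_label(score):
    """Map a sentiment score in [-1.0, 1.0] to one of five categories.

    The thresholds are illustrative assumptions, not standard values.
    """
    if not -1.0 <= score <= 1.0:
        raise ValueError("score must be between -1.0 and 1.0")
    if score <= -0.6:
        return "very negative"
    if score <= -0.2:
        return "negative"
    if score < 0.2:
        return "neutral"
    if score < 0.6:
        return "positive"
    return "very positive"


for value in (-0.9, -0.3, 0.0, 0.4, 0.9):
    print(value, fine_grained_label(value))
```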

Challenges in Sentiment Analysis

- Sarcasm and Irony:

- Sarcastic comments can reverse the meaning of words, making it difficult for models to detect the true sentiment (e.g., “Oh, great! Another traffic jam.”).

- Context Dependence:

- Words can have different meanings based on context (e.g., “sick” means unwell in “I feel sick” but is slang for amazing in “The film was sick”).

- Negation Handling:

- Phrases with negation words (e.g., not good, never happy) can be tricky for models to interpret accurately.

- Language and Cultural Nuances:

- Sentiment expressions can vary across different cultures and languages.

- Data Imbalance:

- Sentiment analysis models may perform poorly if the training data is imbalanced (e.g., more positive samples than negative ones).
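
To make the negation problem concrete, here is a small lexicon-based scorer that flips the polarity of sentiment words appearing shortly after a negation word. The lexicon, the negation list, and the two-token window are assumptions chosen for the example; this simple heuristic will still miss sarcasm and longer-range negation.

```python
import re

# Hypothetical mini-lexicon: word -> polarity score.
LEXICON = {"good": 1.0, "happy": 1.0, "great": 1.5, "bad": -1.0, "terrible": -1.5}
NEGATIONS = {"not", "never", "no"}


def lexicon_score(text, window=2):
    """Sum word polarities, inverting words within `window` tokens of a negation."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0.0
    negate_left = 0  # how many upcoming tokens are still inside a negation scope
    for tok in tokens:
        if tok in NEGATIONS:
            negate_left = window
            continue
        value = LEXICON.get(tok, 0.0)
        if negate_left > 0:
            value = -value
            negate_left -= 1
        score += value
    return score


print(lexicon_score("good"))           # 1.0
print(lexicon_score("not good"))       # -1.0
print(lexicon_score("not very good"))  # -1.0
```

Without the negation window, "not good" and "good" would receive the same score, which is the failure mode described above.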

Tools and Libraries for Sentiment Analysis

- Python Libraries:

- NLTK (Natural Language Toolkit): Provides a range of NLP functionalities, including sentiment analysis.

- TextBlob: Simplifies NLP tasks and has a built-in sentiment analysis tool.

- VADER (Valence Aware Dictionary and sEntiment Reasoner): Good for analyzing sentiments in social media text.

- spaCy: An efficient library for NLP tasks that can be integrated with third-party sentiment analysis tools.

- Hugging Face Transformers: State-of-the-art models such as BERT and GPT for sentiment analysis.

- APIs:

- Google Cloud Natural Language API

- IBM Watson Natural Language Understanding

- Microsoft Azure Text Analytics API

Real-World Applications of Sentiment Analysis

- Social Media Monitoring:

- Brands use sentiment analysis to track public sentiment and gauge the impact of marketing campaigns or public relations efforts.

- Customer Service:

- Automated sentiment analysis in chatbots helps prioritize and route tickets based on sentiment.

- Market Research:

- Companies leverage sentiment analysis to understand consumer opinions and trends.

- Political Sentiment Analysis:

- Social media discussions, news, and articles are analyzed to assess public sentiment towards politicians or policies.

- Stock Market Prediction:

- Sentiment analysis is used to analyze financial news and social media for forecasting stock market trends.

Future of Sentiment Analysis

- Increased Accuracy:

- As models evolve, sentiment analysis is expected to handle more nuanced language and complex emotions.

- Real-Time Analysis:

- With improved computational power, real-time sentiment analysis for live events and streams will become more prevalent.

- Integration with AI-Powered Applications:

- Sentiment analysis is expected to be integrated more deeply into AI applications, including virtual assistants and automated customer feedback systems.

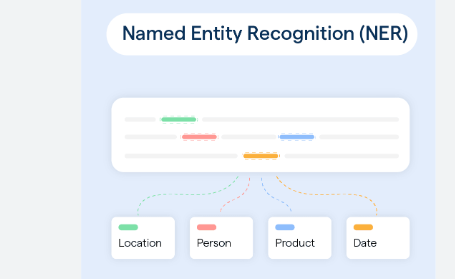

Named Entity Recognition (NER)

What is Named Entity Recognition (NER)?

Named Entity Recognition (NER) is a subtask of NLP that involves identifying and classifying named entities in text into predefined categories. These categories include, but are not limited to, names of people, organizations, locations, dates, numerical expressions, and more.

Example:

- Sentence: “John works at Google and lives in New York.”

- NER Output:

- John: Person

- Google: Organization

- New York: Location

Importance of NER

NER is critical for various applications where understanding and extracting specific information from text is essential. It allows for:

- Information Extraction: Quickly identifying key pieces of data in large documents.

- Content Classification: Tagging and organizing content in a way that makes it easy to search and analyze.

- Question Answering Systems: Enhancing the ability to respond accurately by pinpointing relevant entities.

- Data Preprocessing for Machine Learning: Assisting in creating structured data from unstructured text.

How NER Works

NER typically involves two main processes:

- Entity Detection: Locating the entity in the text.

- Entity Classification: Assigning a label to the detected entity based on its type (e.g., person, location, date).
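
The two processes can be illustrated with a deliberately simple rule-based sketch: a lookup table (a gazetteer) drives detection through pattern matching, and the table's value supplies the classification. The `GAZETTEER` contents and the function name `find_entities` are assumptions for this example; statistical and neural systems learn these decisions from data instead.

```python
import re

# Hypothetical gazetteer: known names mapped to entity types.
GAZETTEER = {
    "John": "Person",
    "Google": "Organization",
    "New York": "Location",
}


def find_entities(text):
    """Return (entity_text, label, start_offset) tuples in reading order."""
    entities = []
    for name, label in GAZETTEER.items():
        # Entity detection: locate each occurrence of the name as a whole word.
        for match in re.finditer(r"\b" + re.escape(name) + r"\b", text):
            # Entity classification: attach the label stored for that name.
            entities.append((match.group(), label, match.start()))
    return sorted(entities, key=lambda item: item[2])


for entity, label, _ in find_entities("John works at Google and lives in New York."):
    print(f"{entity}: {label}")
```

A lookup like this cannot recognize names it has never seen, which is one reason learned models are preferred for open-ended text.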

Steps in NER Implementation

- Text Preprocessing:

- Tokenization: Splitting text into individual words or phrases.

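- Case Normalization (optional): Lowercasing makes text uniform, but capitalization is often a strong cue for named entities, so many NER pipelines keep the original casing.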

- Noise Removal: Removing non-alphanumeric characters and irrelevant information.

- Feature Extraction:

- Extracting linguistic and structural features from the text, such as word shape, POS tags, and neighboring word context.

- Modeling Approaches:

- Rule-Based Systems: Manually crafted rules and patterns using regular expressions.

- Machine Learning Models:

- Conditional Random Fields (CRFs): Widely used for sequence labeling tasks.

- Hidden Markov Models (HMMs): Probabilistic models that consider sequences of words.

- Deep Learning Approaches:

- Recurrent Neural Networks (RNNs) and LSTMs (Long Short-Term Memory): Useful for learning sequential dependencies in text.

- Transformers (e.g., BERT): These models capture contextualized word embeddings that significantly enhance NER performance.

- Training and Evaluation:

- The model is trained on labeled datasets where entities are marked with their corresponding labels.

- Evaluation metrics include Precision, Recall, and F1 Score to assess the effectiveness of the NER system.
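
As a sketch of the evaluation step, the function below computes Precision, Recall, and F1 by comparing predicted entities against gold annotations using exact matches on both text and label. The gold and predicted lists are invented for illustration, and exact matching is only one of several scoring conventions.

```python
def evaluate_entities(gold, predicted):
    """Return (precision, recall, f1) using exact (text, label) matches."""
    gold_set, pred_set = set(gold), set(predicted)
    true_positives = len(gold_set & pred_set)
    precision = true_positives / len(pred_set) if pred_set else 0.0
    recall = true_positives / len(gold_set) if gold_set else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical annotations: the system misses one entity and mislabels another.
gold = [("Elon Musk", "PERSON"), ("SpaceX", "ORG"), ("Tesla", "ORG"), ("California", "GPE")]
predicted = [("Elon Musk", "PERSON"), ("SpaceX", "ORG"), ("Tesla", "PERSON")]

precision, recall, f1 = evaluate_entities(gold, predicted)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
# precision=0.67 recall=0.50 f1=0.57
```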

Pre-Trained Models for NER

There are various pre-trained models and libraries that facilitate the implementation of NER:

- spaCy: An open-source library with built-in capabilities for NER and various NLP tasks. spaCy’s models are known for their efficiency and accuracy.

- NLTK (Natural Language Toolkit): Includes basic NER capabilities and tools for building custom NER models.

- Stanford NER: A Java-based tool from the Stanford NLP Group that provides a comprehensive suite for NER.

- Hugging Face Transformers: Includes pre-trained transformer models like BERT, RoBERTa, and GPT that can be fine-tuned for NER tasks.

Example Using spaCy:

    import spacy

    # Load the small English pipeline, which includes an NER component.
    # (Install it first with: python -m spacy download en_core_web_sm)
    nlp = spacy.load("en_core_web_sm")

    # Process a sample text
    text = "Elon Musk founded SpaceX and Tesla in California."
    doc = nlp(text)

    # Print each named entity with its predicted label
    for ent in doc.ents:
        print(ent.text, ent.label_)

Output (exact labels can vary with the model version):

    Elon Musk PERSON
    SpaceX ORG
    Tesla ORG
    California GPE

Challenges in NER

- Ambiguity:

- Words can have multiple meanings (e.g., “Apple” as a company vs. “apple” as a fruit).

- Out-of-Vocabulary (OOV) Entities:

- New or rare entities not present in the training data may be difficult for models to recognize.

- Domain-Specific Entities:

- General NER models may not perform well on domain-specific text (e.g., medical or legal documents) without customization.

- Multi-Word Entities:

- Correctly identifying entities made up of multiple words (e.g., “New York University”) is more complex.

- Context Sensitivity:

- Context can change the classification of an entity (e.g., “Washington” could be a person, a place, or an organization).
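
One common way sequence-labeling models handle multi-word entities is a tagging scheme such as BIO, where `B-` marks the first token of an entity, `I-` marks a continuation, and `O` marks tokens outside any entity. The scheme is not described above, so treat this as an illustrative assumption; the function name `bio_to_spans` and the sample tags are hypothetical.

```python
def bio_to_spans(tokens, tags):
    """Group BIO-tagged tokens into (entity_text, label) spans."""
    spans = []
    current_tokens, current_label = [], None

    def flush():
        # Store the entity collected so far, if there is one.
        if current_tokens:
            spans.append((" ".join(current_tokens), current_label))

    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            flush()
            current_tokens, current_label = [token], tag[2:]
        elif tag.startswith("I-") and current_label == tag[2:]:
            current_tokens.append(token)
        else:
            # "O" tags, and "I-" tags that do not continue an entity, close the span.
            flush()
            current_tokens, current_label = [], None
    flush()
    return spans


tokens = ["She", "studied", "at", "New", "York", "University", "in", "Washington"]
tags = ["O", "O", "O", "B-ORG", "I-ORG", "I-ORG", "O", "B-GPE"]
print(bio_to_spans(tokens, tags))
# [('New York University', 'ORG'), ('Washington', 'GPE')]
```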

Applications of NER

- Information Retrieval:

- Extracting specific data from large texts for building knowledge bases.

- Customer Support Automation:

- Recognizing entities to automate ticket routing and response generation.

- Healthcare and Medical Research:

- Extracting information such as drug names, diseases, and medical procedures from research papers.

- News Summarization:

- Identifying key figures, organizations, and locations to create concise summaries.

- Finance and Market Analysis:

- Extracting names of companies, financial instruments, and events from financial reports and news.

Future Trends in NER

- Improved Contextual Understanding:

- With the advent of transformer models, NER is advancing in understanding complex contexts and relationships between entities.

- Multilingual NER:

- Enhanced models are being developed to support entity recognition in multiple languages.

- Cross-Domain Transfer Learning:

- Training models on one domain and adapting them to work effectively in another.

- Real-Time NER:

- The development of models that can perform NER on live data streams for applications such as social media monitoring.

Text Generation

What is Text Generation?

Text generation in NLP refers to the automated process of creating meaningful and coherent text using algorithms and models. This task involves generating human-like text based on a given input, prompt, or set of instructions. It encompasses generating various types of content, including stories, articles, summaries, dialogues, and code.

Importance of Text Generation

Text generation has become increasingly significant with the growth of artificial intelligence and its applications in various fields. The ability to generate high-quality text has opened doors to advancements in:

- Content Creation: Automating the production of articles, reports, and marketing copy.

- Conversational AI: Powering chatbots and virtual assistants to provide more natural interactions.

- Creative Writing: Assisting authors in generating plot ideas or expanding on storylines.

- Programming Help: Generating code snippets or helping developers with automated code suggestions.

- Personalized Communication: Tailoring emails, messages, and recommendations for users.

How Text Generation Works

Text generation typically involves the following steps:

- Input Processing:

- The input can be a seed word, a prompt, or a partial sentence that guides the generation process.

- Model Selection:

- The choice of model significantly influences the output quality. Different types of models are used for text generation:

- Markov Chains: A simple probabilistic model that predicts the next word based on the previous one or two words.

- Recurrent Neural Networks (RNNs): Capable of handling sequential data and maintaining context over short sequences.

- Long Short-Term Memory Networks (LSTMs): An advanced version of RNNs designed to capture long-term dependencies.

- Transformers (e.g., GPT, T5): Modern architectures that use attention mechanisms for parallel processing and context capture across longer text sequences.

- Training and Fine-Tuning:

- Models are trained on large corpora of text data. Fine-tuning can be done using domain-specific data to tailor the generation to a particular use case.

- Generation Strategy:

- Greedy Search: Selects the most probable word at each step. It is fast but may lead to less coherent outputs.

- Beam Search: Evaluates multiple potential outputs and selects the most likely sequence.

- Temperature Sampling: Controls the randomness of the generated text. Lower temperatures make the output more deterministic, while higher temperatures introduce more creativity.

- Top-k Sampling: Limits the sampling pool to the top k most likely words at each step.

- Top-p (Nucleus) Sampling: Selects words from a dynamically sized pool that covers a specified probability mass (e.g., top 90%).

- Output Generation:

- The model generates words or tokens iteratively until a stopping condition is met (e.g., end of sentence or maximum token limit).
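
The generation strategies above differ only in how the next word is chosen from the model's scores. The sketch below applies them to a single, made-up set of next-word scores (`SCORES`); in a real system these scores would come from a trained model at every step. All function names here are illustrative assumptions.

```python
import math
import random


def softmax_with_temperature(scores, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {word: s / temperature for word, s in scores.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - peak) for word, s in scaled.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def greedy(probs):
    """Greedy search step: pick the single most probable word."""
    return max(probs, key=probs.get)


def top_k_filter(probs, k):
    """Keep the k most probable words and renormalize."""
    kept = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in kept)
    return {word: p / total for word, p in kept}


def top_p_filter(probs, p):
    """Keep the smallest set of top words whose cumulative probability reaches p."""
    kept, cumulative = [], 0.0
    for word, prob in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept.append((word, prob))
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(prob for _, prob in kept)
    return {word: prob / total for word, prob in kept}


def sample(probs, rng=random):
    """Draw one word at random according to its probability."""
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


# Hypothetical next-word scores for the prompt "I saw a ...".
SCORES = {"cat": 2.0, "dog": 1.0, "car": 0.5, "sky": -1.0}

probs = softmax_with_temperature(SCORES, temperature=1.0)
print(greedy(probs))                     # cat
print(sorted(top_k_filter(probs, 2)))    # ['cat', 'dog']
print(sorted(top_p_filter(probs, 0.8)))  # ['cat', 'dog']
print(sample(top_p_filter(probs, 0.9)))  # random draw from the filtered pool
```

Beam search is omitted because it needs scores for whole sequences rather than a single step.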

Popular Text Generation Models

- GPT (Generative Pre-trained Transformer):

- Developed by OpenAI, GPT models (e.g., GPT-2, GPT-3) are state-of-the-art text generators that produce human-like text based on transformer architecture. GPT-3, in particular, can generate entire essays, conversations, and code snippets with impressive fluency.

- T5 (Text-To-Text Transfer Transformer):

- A model by Google that treats every NLP task as a text-to-text problem, making it versatile for both generating and processing text.

- BERT (Bidirectional Encoder Representations from Transformers):

- Although BERT is mainly used for text understanding and analysis rather than generation, it has inspired numerous other models with its transformer-based architecture.

- XLNet:

- An autoregressive model that uses a permutation-based training objective to capture context from both directions, and builds on Transformer-XL to better handle long-term dependencies.

- CTRL (Conditional Transformer Language Model):

- Developed by Salesforce, this model allows users to guide the generated text using control codes, enhancing directed output.

Techniques for Effective Text Generation

- Prompt Engineering:

- Carefully designing input prompts can significantly improve the output quality of text generation models. The prompt should be specific and guide the model on what kind of output is expected.

- Fine-Tuning:

- Fine-tuning a pre-trained model on specific data helps generate content aligned with a particular domain, such as medical reports, technical documentation, or news articles.

- Contextual Awareness:

- Ensuring that the model captures enough context by using sufficiently large input windows and leveraging models like transformers, which can process longer text efficiently.

- Human-in-the-Loop:

- Combining automated generation with human oversight ensures that the final content meets quality standards and adheres to ethical considerations.

Applications of Text Generation

- Chatbots and Conversational Agents:

- Generating responses for customer service chatbots and AI companions.

- Content Creation:

- Assisting with writing blogs, articles, and creative stories.

- Email Automation:

- Composing automated email replies and marketing content.

- Programming and Code Generation:

- Tools like GitHub Copilot use language models to assist developers by generating code snippets and auto-completing lines of code.

- Summarization and Paraphrasing:

- Condensing lengthy documents into concise summaries or rephrasing content for different audiences.

Challenges in Text Generation

- Coherence and Relevance:

- Maintaining logical flow and relevance throughout a longer piece of text can be difficult. Models sometimes produce content that, while syntactically correct, may lack meaningful connections between sentences.

- Bias in Training Data:

- Language models can inadvertently reproduce biases present in the training data, leading to problematic or inappropriate outputs.

- Creativity vs. Fact:

- Models might generate creative but factually incorrect statements, which can be problematic in applications requiring accuracy.

- Computational Resources:

- Training and running large language models require significant computational power and memory, limiting accessibility.

- Ethical Concerns:

- Text generation models can be used to create misleading information, spam, or offensive content, raising concerns about responsible use and regulation.

Future of Text Generation

- Improved Context Handling:

- Enhanced models that capture and maintain context over longer text inputs to produce more coherent outputs.

- Ethical AI Development:

- Stricter guidelines and frameworks to minimize biases and ensure responsible text generation.

- Real-Time Applications:

- More efficient algorithms that support real-time text generation for applications such as live chat and real-time transcription.

- Multimodal Integration:

- Combining text generation with other data types, such as images and audio, for richer user experiences.

Chatbots

What are Chatbots?

Chatbots are AI-driven software applications that interact with users using natural language. These conversational agents can simulate human conversation through text or voice and are commonly integrated into websites, mobile apps, and messaging platforms.

Types of Chatbots

- Rule-Based Chatbots:

- Operate on predefined scripts and patterns.

- Respond to specific keywords and follow a set sequence of questions and responses.

- Limited in flexibility and cannot handle complex or unexpected queries.

- Example: FAQ bots that provide answers to common questions.

- AI-Powered Chatbots:

- Use machine learning and NLP to understand and generate responses.

- Capable of learning from user interactions and improving over time.

- More flexible and capable of handling a wide range of questions.

- Example: Virtual assistants like Siri, Alexa, and Google Assistant.
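
A rule-based chatbot of the kind described above can be as small as a keyword lookup. In this sketch the rules, the canned answers, and the fallback message are all invented for illustration; the inflexibility is visible in how any message without a listed keyword falls through to the fallback.

```python
import re

# Hypothetical FAQ rules: a set of trigger keywords and a canned answer.
RULES = [
    ({"hours", "open", "close"}, "We are open from 9 am to 5 pm, Monday to Friday."),
    ({"refund", "return"}, "You can request a refund within 30 days of purchase."),
    ({"shipping", "delivery"}, "Standard shipping usually takes 3 to 5 business days."),
]
FALLBACK = "Sorry, I did not understand that. Could you rephrase?"


def reply(message):
    """Return the answer of the first rule whose keywords appear in the message."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    for keywords, answer in RULES:
        if words & keywords:
            return answer
    return FALLBACK


print(reply("What are your opening hours?"))
print(reply("How do I get a refund?"))
print(reply("Tell me a joke"))
```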

How Chatbots Work

Chatbots use a combination of NLP and other machine learning techniques to understand and generate human-like responses. The workflow typically involves:

- Text Processing:

- Tokenization: Breaking down input text into words or phrases.

- POS Tagging: Identifying parts of speech in the input text.

- Named Entity Recognition (NER): Detecting and classifying named entities (e.g., names, dates, locations).

- Dependency Parsing: Analyzing grammatical relationships between words.

- Understanding Intent:

- Chatbots use intent recognition to determine the goal behind a user’s message (e.g., booking a flight, checking the weather).

- Machine Learning Models: Algorithms like Support Vector Machines (SVM), neural networks, or transformer-based models are used for intent classification.

- Response Generation:

- Simple chatbots use predefined templates for responses.

- Advanced chatbots use models like GPT-3 to generate dynamic and contextually relevant replies.

- Response generation can also involve API calls or integration with databases for providing accurate information.

- Natural Language Generation (NLG):

- The process of converting structured data or the chatbot’s intent into human-readable text.

- Techniques include rule-based templating, statistical methods, or deep learning models.
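
The workflow can be sketched with a toy intent recognizer and template-based NLG. Here intent recognition compares the user's message with a few example utterances using bag-of-words cosine similarity, a simple stand-in for the SVM, neural, or transformer classifiers mentioned above. The intents, example utterances, templates, and slot values are assumptions made for the example.

```python
import math
import re
from collections import Counter

# Hypothetical example utterances for each intent.
INTENT_EXAMPLES = {
    "book_flight": ["book a flight to paris", "i need a plane ticket", "reserve a flight"],
    "check_weather": ["what is the weather today", "will it rain tomorrow", "weather forecast"],
}

# Hypothetical response templates with slots to fill from structured data.
TEMPLATES = {
    "book_flight": "Sure, I can help you book a flight to {city}.",
    "check_weather": "The forecast for {city} is {forecast}.",
}


def vectorize(text):
    """Tokenize and count words (a bag-of-words vector)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def recognize_intent(message):
    """Return the intent whose example is most similar, or None if nothing overlaps."""
    query = vectorize(message)
    best_intent, best_score = None, 0.0
    for intent, examples in INTENT_EXAMPLES.items():
        for example in examples:
            score = cosine(query, vectorize(example))
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent


def generate_response(intent, slots):
    """Template-based NLG: fill structured slot values into a sentence."""
    return TEMPLATES[intent].format(**slots)


intent = recognize_intent("Is it going to rain?")
print(intent)  # check_weather
print(generate_response(intent, {"city": "Paris", "forecast": "sunny"}))
```

In a fuller system the slot values would come from NER on the user's message and from an API or database call, as described in the response generation step.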

Applications of Chatbots

- Customer Service:

- Providing instant support for queries, troubleshooting, and information about products and services.

- E-commerce:

- Assisting with product recommendations, order tracking, and payments.

- Healthcare:

- Scheduling appointments, providing basic medical information, and triaging symptoms.

- Education:

- Offering tutoring, answering questions, and helping with language learning.

- Banking and Finance:

- Managing transactions, checking account details, and offering financial advice.

Challenges in Chatbot Development

- Understanding Complex Queries:

- Handling ambiguous or multi-intent queries is difficult for some models.

- Maintaining Context:

- Keeping track of context over long conversations is challenging, though transformer models have improved in this area.

- Language Nuances:

- Sarcasm, idioms, and regional dialects can confuse even sophisticated chatbots.

- Data Privacy:

- Ensuring user data security and compliance with regulations like GDPR.

Machine Translation

What is Machine Translation?

Machine translation (MT) refers to the automatic conversion of text from one language to another using NLP algorithms. The goal is to preserve the meaning, tone, and context of the original content.

Types of Machine Translation

- Rule-Based Machine Translation (RBMT):

- Uses linguistic rules and bilingual dictionaries to translate text.

- Effective for simple, structured sentences but struggles with complex or idiomatic language.

- Statistical Machine Translation (SMT):

- Relies on statistical models to find the most probable translation based on large corpora of bilingual text.

- The quality of translations depends on the size and quality of the training data.

- Neural Machine Translation (NMT):

- Utilizes deep learning models, such as recurrent neural networks (RNNs) and transformers, to produce more fluent and natural translations.

- The most advanced and effective approach for modern machine translation.

- Examples include Google Translate, DeepL, and Microsoft Translator.
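
To show why rule-based translation works for simple sentences but breaks down quickly, here is a toy English-to-Spanish translator built from a five-word bilingual dictionary and a single reordering rule (Spanish adjectives usually follow the noun). The dictionary, the adjective list, and the function name are assumptions for illustration; real RBMT systems use far larger lexicons and many grammatical rules.

```python
import re

# Hypothetical bilingual dictionary and adjective list.
EN_TO_ES = {"the": "el", "cat": "gato", "drinks": "bebe", "water": "agua", "black": "negro"}
ADJECTIVES = {"black"}


def translate(sentence):
    """Apply one reordering rule, then translate word by word."""
    words = re.findall(r"[a-z]+", sentence.lower())
    reordered = []
    i = 0
    while i < len(words):
        # Rule: an adjective followed by another word swaps places with it.
        if words[i] in ADJECTIVES and i + 1 < len(words):
            reordered.extend([words[i + 1], words[i]])
            i += 2
        else:
            reordered.append(words[i])
            i += 1
    # Words missing from the dictionary are marked rather than guessed.
    return " ".join(EN_TO_ES.get(word, f"<{word}>") for word in reordered)


print(translate("The black cat drinks water"))  # el gato negro bebe agua
print(translate("The cat sleeps"))              # el gato <sleeps>
```

Every new word or construction needs another dictionary entry or rule, which is the scaling problem that statistical and neural approaches address by learning from bilingual text.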

How Neural Machine Translation Works

- Encoder-Decoder Architecture:

- In the classic sequence-to-sequence design, the input text is passed through an encoder, which converts it into a fixed-size context vector.

- The decoder then generates the translated text word-by-word, based on this context vector.

- Attention Mechanism: Improves translation by allowing the model to focus on specific parts of the input sequence for better context handling.

- Transformer Models:

- Introduced in the paper “Attention Is All You Need”, transformers use self-attention mechanisms to process words in parallel and capture long-term dependencies.

- BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer) are examples of transformer architectures used in NLP, though GPT is more focused on text generation.

- Transformers in Translation: Tools like Google Translate have integrated transformer models for high-quality translations.
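
The attention mechanism at the core of transformers can be written in a few lines. This sketch implements scaled dot-product attention from “Attention Is All You Need”, `softmax(Q K^T / sqrt(d_k)) V`, using plain Python lists. The tiny query, key, and value vectors are made up for the example; real models learn them and operate on large matrices.

```python
import math


def softmax(values):
    """Convert a list of scores into weights that sum to 1."""
    peak = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors.

    Returns (outputs, weights): one output vector and one weight row per query.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the weighted average of the value vectors.
        output = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical two-word source sentence with 2-dimensional vectors.
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
queries = [[5.0, 0.0]]  # this query is aligned with the first key

outputs, weights = attention(queries, keys, values)
print([round(w, 3) for w in weights[0]])  # most weight falls on the first word
print([round(x, 3) for x in outputs[0]])
```

Because each query attends to all keys directly, the decoder is no longer limited to a single fixed-size summary of the input.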

Applications of Machine Translation

- Cross-Language Communication:

- Breaking language barriers for global collaboration and communication.

- Content Localization:

- Translating websites, software interfaces, and marketing content for different regions.

- Education and Research:

- Enabling access to research papers and educational material in various languages.

- Customer Support:

- Providing multilingual support for users around the world.

Challenges in Machine Translation

- Context Preservation:

- Translating ambiguous words or sentences without losing their intended meaning.

- Idiomatic Expressions:

- Handling idioms, slang, and culturally specific phrases.

- Low-Resource Languages:

- Lack of data for training models in less common languages.

- Homonyms and Polysemy:

- Words with multiple meanings can be challenging for models to translate correctly without sufficient context.

Future of Machine Translation

- Multilingual NMT Models:

- Development of models that can translate between multiple languages without needing separate training for each language pair.

- Context-Aware Translations:

- Enhanced models that understand broader context across paragraphs and documents.

- Real-Time Translation:

- Continued improvement in real-time translation for spoken language in applications like live meetings and video calls.

- Low-Resource Language Support:

- Efforts to develop better translation capabilities for languages with limited training data through techniques like transfer learning.

Conclusion

Natural Language Processing (NLP) has become an integral part of modern technology, enabling machines to understand, interpret, and generate human language. Each subfield within NLP—sentiment analysis, named entity recognition (NER), text generation, chatbots, and machine translation—plays a significant role in enhancing our interaction with technology and broadening its applications across industries.

Sentiment analysis allows businesses and organizations to gauge public opinion and customer sentiment efficiently, aiding in better decision-making and customer relationship management. Its ability to analyze emotions expressed in text provides valuable insights into user behavior and market trends.

Named Entity Recognition (NER) transforms unstructured text into structured information by identifying and classifying specific entities, such as names, places, and dates. NER is a foundation for many NLP applications, including search engines, recommendation systems, and information retrieval, making data analysis more targeted and efficient.

Text generation has revolutionized content creation and automation. From writing articles and generating creative content to supporting code generation for developers, advancements in text generation have shown how powerful AI-driven tools can be in assisting and augmenting human tasks. Models such as GPT and T5 demonstrate the potential for creating contextually relevant and human-like text, with applications spanning various domains.

Chatbots have significantly improved customer service and user interactions by automating responses and simulating human-like conversations. AI-powered chatbots provide real-time assistance and enhance user experiences, driving efficiency in sectors like e-commerce, healthcare, finance, and customer support. Their ability to learn and adapt through machine learning and NLP advancements has made them more dynamic and context-aware.

Machine translation bridges language barriers, making global communication and content localization more accessible than ever. By leveraging neural machine translation (NMT) and transformer models, machine translation tools can deliver accurate and fluent translations, fostering international collaboration and inclusivity. Although challenges like idiomatic expressions and low-resource languages persist, continuous improvements in NMT models and context-aware algorithms are paving the way for even more reliable translations.

Together, these NLP technologies are reshaping how we interact with machines, consume content, and connect with one another across languages and platforms. Despite the challenges of handling nuances, context, and ethical considerations, the rapid advancements in NLP and AI promise a future where these tools will be even more accurate, contextually aware, and seamlessly integrated into our everyday lives. As research and innovation continue, NLP will become an even more powerful tool for enhancing productivity, enabling real-time communication, and enriching user experiences across the globe.