Artificial intelligence (AI) has become a crucial part of our lives, powering everything from search engines to medical diagnostics. However, the ethical issues surrounding AI, particularly bias in machine learning, can have profound social consequences. This post explores three key areas: fairness in machine learning, bias detection and mitigation, and responsible AI practices.

AI Ethics and Bias: Ensuring Fairness, Transparency, and Responsibility in Machine Learning (2024)

What are AI Ethics?

AI ethics refers to the moral principles that guide the design, development, and deployment of artificial intelligence systems. These principles typically center on fairness (including the avoidance of bias), transparency, accountability, privacy, and security. By following such guidelines, data practitioners can help ensure that AI systems respect human rights, protect users’ privacy, and promote social good.

1. Understanding Fairness in Machine Learning

Fairness in machine learning refers to designing algorithms that do not unfairly discriminate against individuals or groups. Biases, whether they are historical, societal, or algorithmic, can lead to unfair outcomes.

Key Concepts:

- Group Fairness: Ensuring equal treatment for groups defined by sensitive attributes like race or gender.

- Individual Fairness: Similar individuals should receive similar outcomes.

- Statistical Parity: A group-fairness criterion requiring that the rate of positive outcomes be the same for every group.
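Individual fairness is often formalized as a Lipschitz condition: the gap between two people's scores should be no larger than a constant L times the distance between them. The sketch below checks that condition pairwise. It assumes Euclidean distance as the similarity metric, which is purely illustrative; choosing a meaningful metric is the hard part in practice. The function name and data are hypothetical.

```python
import numpy as np


def lipschitz_violations(X, scores, lipschitz_const=1.0):
    """Return index pairs (i, j) that break |f(x_i) - f(x_j)| <= L * d(x_i, x_j).

    Assumption: Euclidean distance stands in for the task-specific
    similarity metric d, which in practice must be chosen by domain experts.
    """
    X = np.asarray(X, dtype=float)
    scores = np.asarray(scores, dtype=float)
    violations = []
    for i in range(len(X)):
        for j in range(i + 1, len(X)):
            distance = np.linalg.norm(X[i] - X[j])
            # Similar individuals (small distance) must receive similar scores.
            if abs(scores[i] - scores[j]) > lipschitz_const * distance:
                violations.append((i, j))
    return violations


# Individuals 0 and 1 are nearly identical but receive very different scores.
X = [[0.0, 1.0], [0.0, 1.1], [5.0, 5.0]]
scores = [0.9, 0.2, 0.5]
print(lipschitz_violations(X, scores))  # [(0, 1)]
```

The pairwise loop is quadratic in the number of individuals, so for large datasets it would typically be run on a sample.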

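Mathematical Representation: Let Y be the model's binary prediction (Y = 1 is the favorable outcome) and A the sensitive attribute, with groups a1 and a2. Statistical parity requires:

$$P(Y = 1 \mid A = a_1) = P(Y = 1 \mid A = a_2)$$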
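In practice, the two probabilities are estimated from a model's predictions on a sample, and the gap between them (the statistical parity difference) is reported. A minimal sketch, assuming binary 0/1 predictions and hypothetical group labels "a1" and "a2":

```python
def positive_rates(y_pred, groups):
    """Estimate P(Y = 1 | A = g) for every group g from binary predictions."""
    rates = {}
    for g in sorted(set(groups)):
        preds = [p for p, grp in zip(y_pred, groups) if grp == g]
        rates[g] = sum(preds) / len(preds)
    return rates


def statistical_parity_difference(y_pred, groups, a1, a2):
    """P(Y = 1 | A = a1) - P(Y = 1 | A = a2); 0 means statistical parity holds exactly."""
    rates = positive_rates(y_pred, groups)
    return rates[a1] - rates[a2]


y_pred = [1, 0, 1, 1, 1, 0, 0, 0]
groups = ["a1", "a1", "a1", "a1", "a2", "a2", "a2", "a2"]
print(positive_rates(y_pred, groups))                             # {'a1': 0.75, 'a2': 0.25}
print(statistical_parity_difference(y_pred, groups, "a1", "a2"))  # 0.5
```

On finite samples the difference is rarely exactly zero, so teams usually pick a tolerance rather than demanding strict equality.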

2. Bias Detection in Machine Learning Models

Bias detection involves identifying disparities in model predictions across different demographic groups.

Steps to Detect Bias:

- Data Analysis: Check for imbalances in training data that could propagate bias.

- Model Auditing: Evaluate model outputs for various sensitive attributes.

- Fairness Metrics:

  - Disparate Impact: The ratio of the positive-outcome rate for one group to that of another, typically an unprivileged group compared with a privileged one.

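$$DI = \frac{P(Y = 1 \mid A = a_1)}{P(Y = 1 \mid A = a_2)}$$

Here a1 is the unprivileged group and a2 the privileged group. Under the Four-Fifths (80%) Rule from U.S. employment-selection guidelines, a DI value below 0.8 is commonly treated as evidence of potential adverse impact.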
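The disparate impact ratio and the four-fifths check can be computed directly from a model's predictions. The sketch below is one straightforward way to do it, assuming binary 0/1 predictions; the function names and the "a1"/"a2" labels are hypothetical, and a real audit would also report group sizes and confidence intervals.

```python
def selection_rate(y_pred, groups, group):
    """Estimate P(Y = 1 | A = group) from binary predictions."""
    preds = [p for p, g in zip(y_pred, groups) if g == group]
    if not preds:
        raise ValueError(f"no samples for group {group!r}")
    return sum(preds) / len(preds)


def disparate_impact(y_pred, groups, unprivileged, privileged):
    """DI = P(Y = 1 | A = unprivileged) / P(Y = 1 | A = privileged)."""
    privileged_rate = selection_rate(y_pred, groups, privileged)
    if privileged_rate == 0:
        raise ValueError("privileged group has no positive predictions; DI is undefined")
    return selection_rate(y_pred, groups, unprivileged) / privileged_rate


def flags_adverse_impact(di, threshold=0.8):
    """Four-fifths rule: a ratio below 0.8 is flagged for review."""
    return di < threshold


y_pred = [1, 0, 0, 0, 0, 1, 1, 0, 0, 0]
groups = ["a1"] * 5 + ["a2"] * 5  # a1 = unprivileged, a2 = privileged (hypothetical)
di = disparate_impact(y_pred, groups, "a1", "a2")
print(di, flags_adverse_impact(di))  # 0.5 True
```

A flag here is a signal to investigate, not proof of discrimination; small groups in particular can produce unstable ratios.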

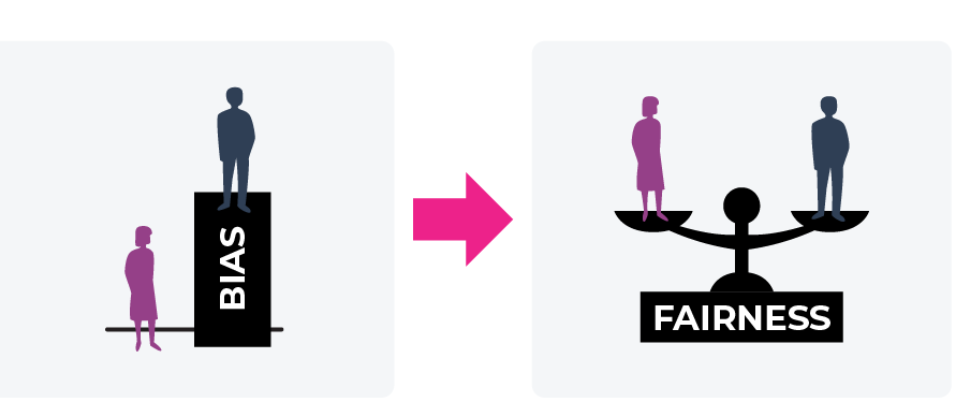

Bias and Fairness in Machine Learning

Bias and fairness are central to AI ethics because they determine whether an AI system treats different groups equitably. Ensuring fairness is essential for AI solutions that are not only effective but also ethical: biased AI can produce discriminatory outcomes that perpetuate or exacerbate existing inequalities. Addressing bias requires attention at every stage of development, from diverse data collection and preprocessing to fairness-aware algorithms and ongoing evaluation. By focusing on fairness, AI developers can build more inclusive and equitable systems that better serve the needs of all users.

3. Bias Mitigation Techniques

Several strategies can be implemented to reduce bias and improve fairness in machine learning models.

Common Techniques:

- Pre-processing: Modifying the training data (for example, by reweighting or resampling) to reduce bias before training.

- In-processing: Incorporating fairness constraints or penalties during model training.

- Post-processing: Adjusting a trained model's predictions or decision thresholds after training.
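One simple post-processing strategy is to leave the trained model untouched and choose a separate decision threshold for each group so that every group ends up with roughly the same positive rate. The sketch below illustrates that idea under the assumption that the model outputs scores where higher means more favorable; the function names, scores, and target rate are hypothetical.

```python
import math


def per_group_thresholds(scores, groups, target_rate):
    """Choose one score cutoff per group so that roughly `target_rate` of each group
    is predicted positive. Ties at a cutoff can push a group slightly above the target."""
    thresholds = {}
    for g in set(groups):
        group_scores = sorted((s for s, grp in zip(scores, groups) if grp == g), reverse=True)
        k = round(target_rate * len(group_scores))  # how many to accept in this group
        thresholds[g] = group_scores[k - 1] if k > 0 else math.inf
    return thresholds


def apply_thresholds(scores, groups, thresholds):
    """Predict 1 when a score reaches its own group's cutoff, else 0."""
    return [int(s >= thresholds[g]) for s, g in zip(scores, groups)]


scores = [0.9, 0.8, 0.3, 0.2, 0.6, 0.4, 0.35, 0.1]
groups = ["a1"] * 4 + ["a2"] * 4
thresholds = per_group_thresholds(scores, groups, target_rate=0.5)
print(apply_thresholds(scores, groups, thresholds))  # [1, 1, 0, 0, 1, 1, 0, 0]
```

With a single global threshold of 0.5, the same scores would accept half of group a1 but only a quarter of group a2. Per-group thresholds close that gap, at the cost of treating identical scores differently across groups, which is a trade-off each application has to weigh.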

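Example Algorithm: Reweighting Training Samples

To mitigate bias, training samples can be reweighted so that each group is represented in balance. A simple scheme weights each sample by the inverse of its group's size:

$$w_i = \frac{1}{n_{g(i)}}$$

where n_{g(i)} is the number of training samples in sample i's group, so every group contributes the same total weight.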
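The inverse-group-size formula translates almost directly into code. This is a minimal sketch with a hypothetical function name and group labels:

```python
from collections import Counter


def inverse_group_size_weights(groups):
    """Weight each sample by 1 / (size of its group).

    Every group then carries the same total weight (1.0), so a small group
    influences a weighted training loss as much as a large one.
    """
    group_sizes = Counter(groups)
    return [1.0 / group_sizes[g] for g in groups]


groups = ["a1", "a1", "a1", "a2"]
weights = inverse_group_size_weights(groups)
print(weights)  # [0.3333333333333333, 0.3333333333333333, 0.3333333333333333, 1.0]
```

Many scikit-learn estimators accept such per-sample weights through the `sample_weight` argument of `fit`. Because the weights sum to the number of groups rather than the number of samples, some training setups rescale them (for example, multiplying by n / number_of_groups) to keep the overall loss scale unchanged.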

4. Responsible AI Practices

Responsible AI involves ethical guidelines and practices that ensure the development and deployment of AI align with social norms and values.

Best Practices for Responsible AI:

- Transparency: Making the data, model, and decision process clear.

- Accountability: Ensuring there are mechanisms in place for auditing and recourse.

- Inclusive Design: Considering diverse perspectives during AI development.

Frameworks and Tools:

- LIME (Local Interpretable Model-agnostic Explanations): Provides local explanations for model predictions.

- SHAP (SHapley Additive exPlanations): A method to attribute the contribution of each feature to the prediction.

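SHAP Representation: SHAP expresses a single prediction as a baseline plus one additive contribution per feature:

$$f(x) = \mathbb{E}[f(X)] + \sum_{i=1}^{M} \phi_i$$

where f(x) is the prediction for a given input, E[f(X)] is the average prediction over all instances, M is the number of features, and φ_i is the SHAP value of feature i.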
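SHAP values have an additive property: the baseline E[f(X)] plus the per-feature contributions φ_i adds up to the prediction f(x). To make that concrete, the sketch below computes exact Shapley values by brute force over every feature coalition. It is not the `shap` library's algorithm, which relies on faster approximations and model-specific methods. It assumes that "absent" features are filled in from a small background dataset, whose mean prediction stands in for E[f(X)]. The model, coefficients, and data are hypothetical.

```python
import itertools
import math

import numpy as np


def exact_shap_values(f, x, background):
    """Brute-force Shapley values for one input `x`.

    f          -- model mapping a 2-D array (rows, M) to a 1-D array of predictions
    x          -- the M feature values being explained
    background -- reference rows; features outside a coalition take their values
                  from these rows, and mean(f(background)) estimates E[f(X)]
    The loop visits all 2**M coalitions, so this only suits a handful of features.
    """
    x = np.asarray(x, dtype=float)
    background = np.asarray(background, dtype=float)
    m = len(x)

    def coalition_value(coalition):
        # Average prediction with the features in `coalition` fixed to x.
        rows = background.copy()
        idx = list(coalition)
        if idx:
            rows[:, idx] = x[idx]
        return float(np.mean(f(rows)))

    phi = np.zeros(m)
    for i in range(m):
        others = [j for j in range(m) if j != i]
        for size in range(m):
            # Shapley weight |S|! (M - |S| - 1)! / M! for coalitions S of this size.
            weight = math.factorial(size) * math.factorial(m - size - 1) / math.factorial(m)
            for subset in itertools.combinations(others, size):
                phi[i] += weight * (coalition_value(subset + (i,)) - coalition_value(subset))
    return phi


# Hypothetical linear model and background data for illustration.
coefficients = np.array([2.0, -1.0, 0.5])


def linear_model(X):
    return X @ coefficients + 1.0


x = np.array([1.0, 2.0, 3.0])
background = np.array([[0.0, 0.0, 0.0], [2.0, 2.0, 2.0]])
phi = exact_shap_values(linear_model, x, background)
baseline = float(np.mean(linear_model(background)))
print(phi)                                    # approximately [0, -1, 1]
print(baseline + phi.sum(), linear_model(x))  # both approximately 2.5
```

For a linear model, each φ_i reduces to the coefficient times the feature's deviation from its background mean, which makes the output easy to check by hand.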

Transparency

Transparency is another key area of AI ethics, as it pertains to the openness and clarity with which AI systems operate. Ensuring that AI models are transparent helps to maintain trust, facilitate understanding, and promote accountability. However, the complexity of many AI systems, particularly deep learning models, presents challenges to achieving full transparency. Strategies for increasing transparency include creating interpretable models, using explainable AI techniques, and providing clear documentation of the development process and decision-making criteria. By prioritizing transparency, AI developers can foster greater trust and understanding of AI systems among users and stakeholders.

Consequences of Unethical Artificial Intelligence

The consequences of unethical AI practices are far-reaching and can significantly impact individuals, communities, and society as a whole. Understanding these consequences is essential for appreciating the importance of prioritizing ethical considerations in AI development.

Discrimination and inequality are among the most concerning consequences of unethical AI. Biased AI systems can lead to discriminatory outcomes that disproportionately affect certain groups based on factors such as race, gender, or socioeconomic status. This can further exacerbate existing inequalities and hinder social progress. For example, biased hiring algorithms may result in unfair treatment of minority candidates, while biased lending algorithms could deny loans to deserving individuals from underprivileged backgrounds. By perpetuating and reinforcing these inequalities, unethical AI practices can create barriers to social and economic mobility for marginalized groups.

Misinformation and manipulation represent additional risks associated with unethical AI applications. AI-generated deepfakes, for example, can create realistic but false images or videos that can deceive and mislead people. This poses a serious threat to democratic processes and undermines the credibility of information sources. To address this challenge, AI developers must focus on creating systems that promote the integrity and accuracy of information, rather than facilitating the spread of false or misleading content.

Another consequence of unethical AI is the reinforcement of harmful stereotypes. AI systems trained on biased data can reproduce the stereotypes embedded in that data. For example, an AI-driven advertising system may target ads according to gender stereotypes, reinforcing traditional gender roles and entrenching inequality. Addressing this issue requires a commitment to fairness and the development of AI systems that challenge, rather than reinforce, harmful stereotypes.

Finally, unethical AI practices can lead to a lack of accountability, making it difficult to pinpoint responsibility for harm caused by AI systems. This can result in legal challenges and a lack of recourse for affected individuals or communities, further exacerbating the negative consequences of unethical AI. To address this issue, clear lines of accountability must be established for AI developers, users, and organizations, ensuring that ethical standards are upheld and potential harms are addressed in a timely manner.

Conclusion

Ensuring AI systems are fair, unbiased, and ethical is critical for building trust and fostering societal acceptance. By applying bias detection and mitigation techniques and following responsible AI practices, we can work toward more equitable and just machine learning systems.