Transfer Learning: Accelerating Model Development with Pre-trained Networks 2024


Introduction

Transfer learning is a powerful technique in machine learning that involves leveraging a pre-trained model and adapting it to perform a related but different task. This approach can significantly reduce training time and is especially valuable when the new task has limited labeled data. It is particularly effective in applications such as image recognition and natural language processing.

What is Transfer Learning?

Transfer learning uses a model that has already been trained on a large dataset and adapts it to another, specific task. The idea is that the pre-trained model has already learned general features. That knowledge can be transferred by fine-tuning or adapting the model to solve a similar problem with less data and fewer computational resources. This saves both time and compute, allowing efficient models to be developed faster.

How Does Transfer Learning Work?

The basic steps for applying transfer learning include:

- Choosing a Pre-trained Model: Selecting a model pre-trained on a large dataset, such as ImageNet for image classification tasks.

- Freezing Initial Layers: Retaining the learned weights of the pre-trained model and freezing them to prevent updates during training.

- Adding New Layers: Adding task-specific layers (e.g., dense layers) to the model for the new classification or regression task.

- Fine-tuning: Optionally unfreeze some pre-trained layers and adjust their weights during training, typically with a small learning rate, for improved performance on the new task.
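The first three steps can be sketched without any deep learning framework. In the minimal pure-Python example below, a small fixed weight matrix (`FROZEN_W`, hypothetical) stands in for a pre-trained backbone such as VGG16, and a new logistic-regression head is the only part that gets trained. The toy dataset is also invented for illustration. This is a sketch of the freeze-and-add-a-head idea under those assumptions, not how Keras implements it internally.

```python
import copy
import math

# Hypothetical "pre-trained" feature extractor standing in for a real backbone
# such as VGG16. Its weights stay fixed (frozen): nothing below updates them.
FROZEN_W = [[0.8, -0.2], [0.1, 0.9], [-0.5, 0.4]]  # maps 2 inputs -> 3 features


def extract_features(x):
    """Frozen layer: ReLU(W x) using the fixed pre-trained weights."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data, epochs=500, lr=0.5):
    """Train only a new logistic-regression head on top of the frozen features."""
    # The extractor never changes, so its features can be computed once and cached.
    cached = [(extract_features(x), y) for x, y in data]
    w, b = [0.0] * len(FROZEN_W), 0.0
    for _ in range(epochs):
        for f, y in cached:
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            err = p - y  # gradient of binary cross-entropy w.r.t. the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b


def predict(w, b, x):
    f = extract_features(x)
    return sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)


# Toy task (hypothetical): label 1 when the first input dominates the second.
TOY_DATA = [((1.0, 0.0), 1), ((0.9, 0.1), 1), ((0.8, 0.3), 1),
            ((0.0, 1.0), 0), ((0.1, 0.9), 0), ((0.3, 0.8), 0)]

weights_before = copy.deepcopy(FROZEN_W)
head_w, head_b = train_head(TOY_DATA)
assert FROZEN_W == weights_before  # the "pre-trained" layer was not updated
```

Fine-tuning would correspond to also updating `FROZEN_W` once the head has converged, usually with a much smaller learning rate. At that point the features change during training, so they could no longer be cached.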

Key Benefits of Transfer Learning

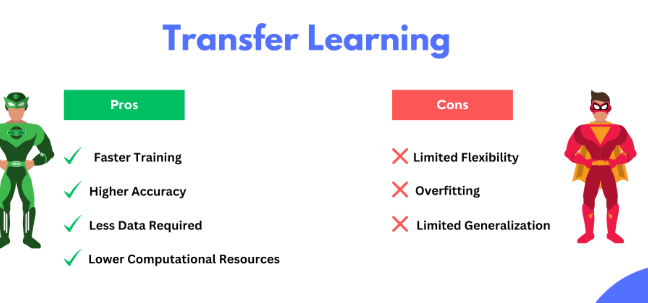

- Faster Training: Because the pre-trained layers have already learned to extract useful features, training the model takes significantly less time.

- Better Performance with Limited Data: Transfer learning enables efficient model training even with a small dataset.

- Lower Computational Requirements: Leveraging a pre-trained model reduces the need for extensive computing power compared to training from scratch.

Advantages and disadvantages of transfer learning

Advantages

– Computational costs. Transfer learning reduces the computational cost of building models for new problems. By repurposing pretrained models or pretrained networks to tackle a different task, users can reduce model training time, the amount of training data, processor usage, and other computational resources. For instance, fewer epochs (i.e., passes through a dataset) may be needed to reach a desired level of accuracy. In this way, transfer learning can accelerate and simplify model training processes.

– Dataset size. Transfer learning particularly helps alleviate the difficulty of acquiring large datasets. For instance, large language models (LLMs) require large amounts of training data to obtain optimal performance, yet quality publicly available datasets can be limited, and producing sufficient manually labeled data can be time-consuming and expensive. Starting from a pretrained model means that only a comparatively small task-specific dataset is needed for adaptation.

– Generalizability. Beyond aiding model optimization, transfer learning can also increase a model’s generalizability. Because transfer learning involves retraining an existing model with a new dataset, the retrained model incorporates knowledge gained from multiple datasets. It may therefore perform better on a wider variety of data than a base model trained on only one type of dataset. In this way, transfer learning can help reduce overfitting.[4]

Of course, the transfer of knowledge from one domain to another cannot offset the negative impact of poor-quality data. Preprocessing techniques and feature engineering, such as data augmentation and feature extraction, are still necessary when using transfer learning.

Disadvantages

Transfer learning has few disadvantages inherent to the technique itself; the main risks come from misapplying it. Transfer learning works best when three conditions are met:

- both learning tasks are similar

- the data distributions of the source and target datasets do not differ too greatly

- a comparable model can be applied to both tasks

When these conditions are not met, transfer learning can degrade model performance, a phenomenon the literature calls negative transfer. Ongoing research proposes a variety of tests for determining whether datasets and tasks meet the above conditions and so will not result in negative transfer.[5] Distant transfer is one method developed to correct for negative transfer caused by too great a dissimilarity between the data distributions of the source and target datasets.[6]

Note that there is no widespread, standard metric for measuring similarity between tasks for transfer learning. A handful of studies, however, propose evaluation methods to predict the similarity between datasets and machine learning tasks, and hence their viability for transfer learning.[7]
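As a rough, illustrative first check on the distribution condition listed above (not one of the cited evaluation methods, and not a standard transfer-learning metric), one can compare two datasets in the feature space of the pre-trained model. The sketch below computes maximum mean discrepancy (MMD) with a linear kernel, which reduces to the squared Euclidean distance between the mean feature vectors of the two datasets. The feature vectors are hypothetical placeholders for backbone outputs.

```python
def mean_vector(rows):
    """Column-wise mean of a list of equal-length feature vectors."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def linear_mmd(source, target):
    """MMD with a linear kernel: squared distance between the mean feature vectors."""
    ms, mt = mean_vector(source), mean_vector(target)
    return sum((a - b) ** 2 for a, b in zip(ms, mt))


# Hypothetical feature vectors, e.g. outputs of a frozen pre-trained backbone.
SOURCE = [[0.0, 0.0], [2.0, 2.0]]
TARGET_NEAR = [[1.0, 1.0], [1.0, 3.0]]
TARGET_FAR = [[5.0, 5.0], [7.0, 7.0]]

print(linear_mmd(SOURCE, TARGET_NEAR), linear_mmd(SOURCE, TARGET_FAR))  # 1.0 50.0
```

A larger value indicates more distant feature distributions, but a linear kernel only compares means, and no threshold reliably predicts negative transfer. Comparing the transferred model against a baseline trained from scratch on the target data remains the direct test.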

Benefits of Transfer Learning

- Reduced Training Time: By using a pre-trained model, the initial phase of learning basic features is bypassed, making training faster.

- Better Performance with Limited Data: Transfer learning is especially useful when the new dataset is small or limited, as the model already has learned representations.

- Generalization: The model can generalize better due to its exposure to a large, diverse dataset during initial training.

Code Example for Transfer Learning with Keras

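Here’s a simple code snippet that adapts a pre-trained model using Keras. All VGG16 layers are frozen, so only the new classification layers are trained (feature extraction); unfreezing some of the top layers afterwards would turn this into fine-tuning: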

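```python
import numpy as np
from tensorflow.keras.applications import VGG16
from tensorflow.keras.applications.vgg16 import preprocess_input
from tensorflow.keras import models, layers, optimizers

# Load the pre-trained VGG16 model without its top (classification) layers
base_model = VGG16(weights='imagenet', include_top=False, input_shape=(224, 224, 3))

# Freeze the layers of the pre-trained model so their weights are not updated
for layer in base_model.layers:
    layer.trainable = False

# Add custom layers for the new task
model = models.Sequential()
model.add(base_model)
model.add(layers.Flatten())
model.add(layers.Dense(256, activation='relu'))
model.add(layers.Dropout(0.5))
model.add(layers.Dense(1, activation='sigmoid'))  # Single sigmoid unit for binary classification

# Compile the model
model.compile(optimizer=optimizers.Adam(learning_rate=0.001),
              loss='binary_crossentropy',
              metrics=['accuracy'])

# Placeholder data for illustration only: replace with your own images of shape
# (N, 224, 224, 3) and binary labels of shape (N,). VGG16 expects preprocess_input.
train_data = preprocess_input(np.random.uniform(0, 255, size=(32, 224, 224, 3)).astype('float32'))
train_labels = np.random.randint(0, 2, size=(32,))

# Train the model (example)
history = model.fit(train_data, train_labels, epochs=10, validation_split=0.2)
```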
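To see why freezing cuts the training cost, it helps to count parameters for the Keras model above. The sketch below recomputes them by hand from the VGG16 layer configuration (13 convolutions of size 3×3 and five 2×2 max-pools) and the custom head; the totals should agree with what `model.summary()` reports as non-trainable and trainable parameters. The helper names are illustrative.

```python
def conv_params(k, c_in, c_out):
    """Parameters of a k x k convolution: weights plus one bias per output channel."""
    return k * k * c_in * c_out + c_out


def dense_params(n_in, n_out):
    """Parameters of a fully connected layer: weight matrix plus biases."""
    return n_in * n_out + n_out


# VGG16 convolutional base: (input channels, output channels) of its 13 3x3 convs,
# grouped by block (2, 2, 3, 3, 3 convolutions).
VGG16_CONVS = [(3, 64), (64, 64),
               (64, 128), (128, 128),
               (128, 256), (256, 256), (256, 256),
               (256, 512), (512, 512), (512, 512),
               (512, 512), (512, 512), (512, 512)]

frozen = sum(conv_params(3, c_in, c_out) for c_in, c_out in VGG16_CONVS)

# Five 2x2 max-pools shrink 224x224 to 7x7, so Flatten yields 7 * 7 * 512 values.
flat = (224 // 2 ** 5) ** 2 * 512
# Dropout has no parameters; only the two Dense layers are trainable.
trainable = dense_params(flat, 256) + dense_params(256, 1)

print(f"frozen: {frozen:,}  trainable: {trainable:,}")
# frozen: 14,714,688  trainable: 6,423,041
```

Almost all trainable parameters sit in the first `Dense` layer because `Flatten` produces 25,088 inputs. Replacing `Flatten` with `layers.GlobalAveragePooling2D()`, a common alternative, would reduce that input to 512 values and the head to 131,585 parameters.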

Transfer learning use cases

There are many applications of transfer learning in real-world machine learning and artificial intelligence settings. Developers and data scientists can use transfer learning to aid in a myriad of tasks and combine it with other learning approaches, such as reinforcement learning.

Natural language processing

One salient issue affecting transfer learning in NLP is feature mismatch. The same feature can carry different meanings, and so different connotations, in different domains (e.g., "light" can refer to weight or to optics). This disparity in feature representations affects sentiment classification tasks, language models, and more. Deep learning-based models, in particular word embeddings, show promise in correcting for this, as they can adequately capture semantic relations and orientations for domain adaptation tasks.[12]

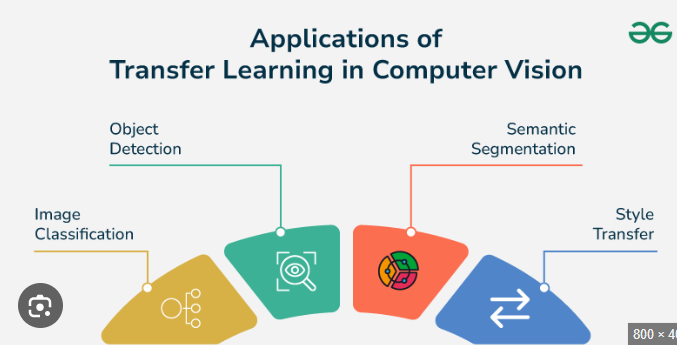

Computer vision

Because it is difficult to acquire sufficient manually labeled data for diverse computer vision tasks, a wealth of research examines transfer learning applications with convolutional neural networks (CNNs). One notable example is ResNet, a CNN architecture widely used as a pretrained model, which demonstrates improved performance in image classification and object detection tasks.[13] Recent research investigates the renowned ImageNet dataset for transfer learning, arguing that (contra computer vision folk wisdom) only small subsets of this dataset are needed to train reliably generalizable models.[14] Many transfer learning tutorials for computer vision use ResNet, ImageNet, or both with TensorFlow’s Keras library.

Applications of Transfer Learning

- Image Classification: Transfer learning is commonly used in computer vision tasks, where models pre-trained on large datasets like ImageNet are adapted for specialized image recognition tasks.

- Natural Language Processing (NLP): Pre-trained models like BERT and GPT can be fine-tuned for specific NLP tasks such as sentiment analysis or question-answering.

- Medical Imaging: Leveraging pre-trained models to diagnose medical conditions using limited labeled data.

- Speech Recognition: Adapting models trained on large speech datasets for domain-specific voice recognition tasks.

- Anomaly Detection: Pre-trained models can be used to detect anomalies in various industries, such as manufacturing and finance.

Challenges and Considerations

- Overfitting: If the new dataset is too small, the model can overfit it. Regularization techniques such as dropout help mitigate this.

- Choosing Layers to Unfreeze: Deciding which layers to unfreeze for fine-tuning requires experimentation.

- Computational Resources: While transfer learning reduces overall training time, fine-tuning a large model still requires considerable computational power.

- Data Preprocessing: Ensure the input data is preprocessed in the same way as the original dataset used for pre-training (e.g., image resizing, normalization).
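For the VGG16 example, Keras’s `tensorflow.keras.applications.vgg16.preprocess_input` uses the "caffe" convention: it converts RGB images to BGR and subtracts per-channel ImageNet means, without scaling to [0, 1]. The pure-Python sketch below re-implements that transform only to make the step concrete. The helper names are hypothetical, and in practice you should call the backbone’s own `preprocess_input`, since other backbones (for example MobileNetV2, which scales inputs to [-1, 1]) expect different preprocessing.

```python
# Per-channel ImageNet means in BGR order, as used by the "caffe" preprocessing mode.
IMAGENET_BGR_MEAN = (103.939, 116.779, 123.68)


def vgg16_style_preprocess(pixel_rgb):
    """Convert one RGB pixel (0-255) to BGR and subtract the ImageNet channel means."""
    r, g, b = pixel_rgb
    bgr = (b, g, r)
    return tuple(c - m for c, m in zip(bgr, IMAGENET_BGR_MEAN))


def preprocess_image(image):
    """Apply the same per-pixel transform to an image given as rows of RGB tuples."""
    return [[vgg16_style_preprocess(px) for px in row] for row in image]


# A pixel equal to the (RGB-ordered) ImageNet mean maps to zero in every channel.
print(vgg16_style_preprocess((123.68, 116.779, 103.939)))  # (0.0, 0.0, 0.0)
```

Whatever transform the pre-trained model saw during training must be applied identically at fine-tuning and inference time; a mismatch (such as feeding [0, 1]-scaled RGB images to this model) silently shifts every input away from the distribution the frozen layers were trained on.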

Tips for Effective Transfer Learning

- Start Simple: Begin with freezing most layers and only training the new layers added for the task. Gradually unfreeze some layers if more fine-tuning is needed.

- Use Regularization: Techniques such as dropout, batch normalization, and early stopping can help prevent overfitting.

- Data Augmentation: Enhance the training set by using transformations like rotation, flipping, and scaling to make the model more robust.
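The augmentation tip can be illustrated with a minimal pure-Python sketch that treats an image as a list of pixel rows and applies a random horizontal flip and a random number of 90-degree rotations. The helper names are hypothetical and scaling is omitted; in Keras, preprocessing layers such as `RandomFlip`, `RandomRotation`, and `RandomZoom` provide these transformations.

```python
import random


def hflip(image):
    """Mirror an image (list of rows) left to right."""
    return [list(reversed(row)) for row in image]


def rot90(image):
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def augment(image, rng):
    """Randomly flip and/or rotate, producing a new training variant of the image."""
    if rng.random() < 0.5:
        image = hflip(image)
    for _ in range(rng.randrange(4)):  # 0 to 3 quarter turns
        image = rot90(image)
    return image


rng = random.Random(42)  # seeded for reproducibility
img = [[1, 2, 3], [4, 5, 6]]
variants = [augment(img, rng) for _ in range(3)]
```

Augmentation should be applied only to training data; validation and test images are left unchanged so that evaluation reflects the real input distribution.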

Conclusion

Transfer learning is a powerful method that allows models to learn effectively from pre-trained networks, saving time and improving performance, especially when data is limited. By reusing existing knowledge, it has become a cornerstone of the modern machine learning toolkit, enabling practitioners to build robust, high-performing models quickly and making them feasible for a wide range of applications.

This comprehensive overview highlights the importance of transfer learning, its workflow, benefits, challenges, and practical tips to optimize its usage.